SWSV home assignment

During the semester, students shall form teams of four and solve a home assignment in which they apply the course's verification and validation techniques (review, source code analysis, testing, etc.) to a given software module.

The home assignment consists of three phases, which will be published on this page. In each phase new parts of the module will be shared, and several tasks have to be solved.

Supporting infrastructure (repository)

To solve the assignments GitHub's infrastructure shall be used. The home assignment is available under the FTSRG-VIMIMA01 organization.

After the teams have been formed, a new private repository will be created for each team, and each team member will get access to it. Team members shall work in this repository. It is forbidden to (1) change the collaborators and teams settings of the repository, (2) rename the repository or make it public, (3) delete the repository or transfer its ownership, or (4) turn on GitHub Pages for the repository.

The instructors will update the ris-2019 repository for each phase of the home assignment. Initially it contains only a folder structure, which was copied into each team's repository (note: these are not forks!). Whenever the instructors' repository is updated, each team has to update its own repository manually.

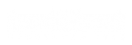

Each team member will therefore work with three repositories (see the figure; 20YY stands for the current year). GitHub stores a remote instance of both the instructors' and the team's repository (Git is a distributed version control system). When a team member wants to work on some files, she first has to clone the team's repository to her own computer. During the clone operation Git creates a remote called origin that points to the repository instance stored on GitHub (see Working with Remotes). After that, a second remote should be configured that points to the instructors' repository, so that the updates for new phases can be fetched. This configuration will be part of the LAB0 preparation phase, where team members will have the opportunity to practice handling the repositories.

Notes:

- The remote can have any name; it does not have to match the name of the repository.

- Students have no write access to the instructors' repository, so do not try to push to it.

- There are more advanced options (e.g. remote-tracking branch), but use them only if all team members are confident with using Git.
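The clone, add-remote and fetch steps described above can be sketched as follows. This is only an illustration under stated assumptions: two local repositories in a temporary directory stand in for the repositories hosted on GitHub, so the script runs without network access; the remote name `upstream` and all paths and file contents are hypothetical; and the team's repository is assumed to share history with the instructors' one, so a plain merge works. With the real repositories, the local paths would be replaced by the GitHub URLs, and the exact update procedure is the one practiced in LAB0.

```shell
#!/bin/sh
set -e

# Sandbox: a throwaway directory, also used as HOME so that the demo
# settings below never touch your real ~/.gitconfig.
work=$(mktemp -d)
export HOME="$work"
git config --global user.name "Demo Student"
git config --global user.email "student@example.com"
git config --global init.defaultBranch master

# Stand-in for the instructors' repository, with the first phase published.
git init -q "$work/instructors"
cd "$work/instructors"
echo "Phase 1 tasks" > README.md
git add README.md
git commit -q -m "Publish phase 1"

# Stand-in for the team's repository (assumption: it starts from the same history).
git clone -q --bare "$work/instructors" "$work/team.git"

# Later, the instructors publish the next phase.
echo "Phase 2 tasks" >> README.md
git commit -q -am "Publish phase 2"

# 1. Clone the team's repository; Git creates the remote "origin" automatically.
git clone -q "$work/team.git" "$work/local"
cd "$work/local"

# 2. Add a second remote that points to the instructors' repository.
#    "upstream" is an arbitrary name chosen for this sketch.
git remote add upstream "$work/instructors"

# 3. Fetch the new phase and merge it into the local master branch.
git fetch -q upstream
git merge -q upstream/master

# 4. Push the update to the team's repository only, never to the instructors' one.
git push -q origin master

# Show the configured remotes and where they point.
git remote -v
```

After running the script, `git log` in the local clone shows both phase commits, and the team's repository contains the phase 2 update.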

Requirements and grading

Roles:

- For every phase the team shall designate a lead. A different member shall take the role of the lead in each phase. The lead is responsible for coordinating the team members and is the team's contact to the instructors for the given phase. The lead shall make sure that the issues for the given phase are closed and that every team member has finished their work.

- For every phase other roles will be defined. Assigning them to team members and balancing the required work is the responsibility of the current lead. These decisions shall be documented in the team's repository.

Teamwork: The subtasks of each phase will be defined by issues opened by the instructors in the team's GitHub repository.

- The progress of each subtask shall be documented in its issue, and all issues shall be finished and closed in order to complete the phase.

- Work on each subtask should preferably be done in a separate Git branch. However, the final solution shall always be merged into the master branch, because only the content of the master branch will be evaluated.

- Please use the GitHub infrastructure to communicate and to organize the team's work. Use issues for assigning work items, comment on issues or pull requests to review each other's work, etc. Note: you can use other communication channels to talk about details, but since the instructors will only see the GitHub repository, make sure that every major decision or activity is documented in the repository as well.
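The branch-per-subtask workflow from the list above can be sketched as follows. This is a minimal illustration, not a prescribed procedure: a local bare repository stands in for the team's GitHub repository, and the branch name `issue-1-review`, the file `docs/review.md` and the commit messages are hypothetical. The merge is done locally here for brevity; on GitHub the same step would typically be performed by merging a reviewed pull request.

```shell
#!/bin/sh
set -e

# Sandbox: a throwaway directory, also used as HOME so that the demo
# settings below never touch your real ~/.gitconfig.
work=$(mktemp -d)
export HOME="$work"
git config --global user.name "Demo Student"
git config --global user.email "student@example.com"
git config --global init.defaultBranch master

# Stand-in for the team's GitHub repository with an initial commit on master.
git init -q "$work/seed"
cd "$work/seed"
echo "SWSV home assignment" > README.md
git add README.md
git commit -q -m "Initial folder structure"
git clone -q --bare "$work/seed" "$work/team.git"

# A team member clones the team's repository.
git clone -q "$work/team.git" "$work/local"
cd "$work/local"

# 1. Work on the subtask in its own branch (hypothetical name tied to an issue).
git checkout -q -b issue-1-review

# 2. Record the work in small, well-described commits.
mkdir -p docs
echo "Review findings" > docs/review.md
git add docs/review.md
git commit -q -m "Add review findings for issue 1"

# 3. Publish the branch so that teammates can review it.
git push -q -u origin issue-1-review

# 4. After the review, merge the result into master and push it,
#    because only the content of master is evaluated.
git checkout -q master
git merge -q --no-ff -m "Merge branch issue-1-review" issue-1-review
git push -q origin master
```

The `--no-ff` option keeps an explicit merge commit, which makes it easier to see in the history which commits belonged to which subtask; a fast-forward merge would also satisfy the requirement that the final solution ends up on master.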

Grading criteria

- As this is a course about quality, the quality of the artefacts produced substantially determines the grades. For example, the review documentation should be clear and consistent, the test code written should be properly formatted and easy to understand, and commits should be structured.

As grading is based on quality and not quantity, every subtask is graded on a qualitative scale (e.g. "Poor"/"Fair"/"Good"/"Excellent" or "Not OK"/"OK"), and points are allocated based on the following general guidelines:

- 0-3 points: some subtasks have not been completed at all, or there are major issues with the solution (several "Poor" grades)

- 4 points: minimal-effort solution or several issues with the submitted artefacts ("Poor" grades)

- 5-6 points: all subtasks have been solved with acceptable quality, the team completed what was necessary to do, but not much effort/thought was put into the solution (mostly "Fair" grades)

- 7-9 points: all subtasks have been completed, most of them with average quality (mostly "Fair" and "Good" grades)

- 10 points: all subtasks have been completed with good quality, and at least one of them received "Excellent", meaning that the team has put effort into coming up with an outstanding solution.

- Every artefact submitted for evaluation is required to be written in English; however, the command of the English language will not be evaluated.

- For every phase the specific grading criteria will be listed on this page.

Each team member will get the same points for each phase, unless the team reports a different work distribution.

- For advice on how to manage problematic team members, see "Coping with Hitchhikers and Couch Potatoes on Teams".

Results

- Each team will receive detailed feedback on the home assignments via the team's GitHub wiki.

HA1 Joining the project

| To be published: | 4th week |
| Deadline: | 2019-10-14 18:00 |
| Goal: | Get familiar with the project by practicing review techniques and development testing of an existing module of the sample system (this module will be used in the subsequent assignment phases). |
| Tasks: | See the issues in the team's repository. |
| Grading: | |

HA2 Integration testing

| To be published: | 8th week |
| Deadline: | 2019-11-11 18:00 |
| Goal: | Practice integration testing by creating test scenarios for module and platform integration. |
| Tasks: | See the issues in the team's repository. |
| Grading: | |

HA3 Test generation

| To be published: | 11th week |
| Deadline: | 2019-12-09 18:00 |
| Goal: | Get to know tools generating tests from models or source code. |
| Tasks: | See the issues in the team's repository. |
| Grading: | |